Business Challenge

A telecommunications company needed a solution to manage its cell tower and communication junction station assets. Site capture had to be done from a mobile device, both for cost-effectiveness and because of drone restrictions in urban areas. The solution needed to assist with:

- Asset Verification

- Component Inventory

- Condition Documentation

- Work Order Management

TL;DR: Laan Labs developed a mobile capture and rendering workflow that helps a telco collect the measurement data it needs through LiDAR meshes, and the visual information it needs through image annotations, 360 photos, NeRFs, and Gaussian Splatting.

Summary

A telecommunications infrastructure client of Laan Labs had been successfully using a custom licensed version of 3D Scanner App in its field asset management processes. Laan Labs helped the client overcome a number of key challenges. First, it added scan photo annotations: during the scan, the user can define a 3D point and attach a photo annotation with notes on key asset parts. Because visual assessment was such a critical part of the process, 360 photo capture was also added to the workflow. Finally, given recent improvements in NeRFs (Neural Radiance Fields) and Gaussian Splatting, both were added as outputs so remote users could inspect assets more effectively; these technologies have advantages over traditional photogrammetry in displaying reflective surfaces, backgrounds, and fine details.

Approach

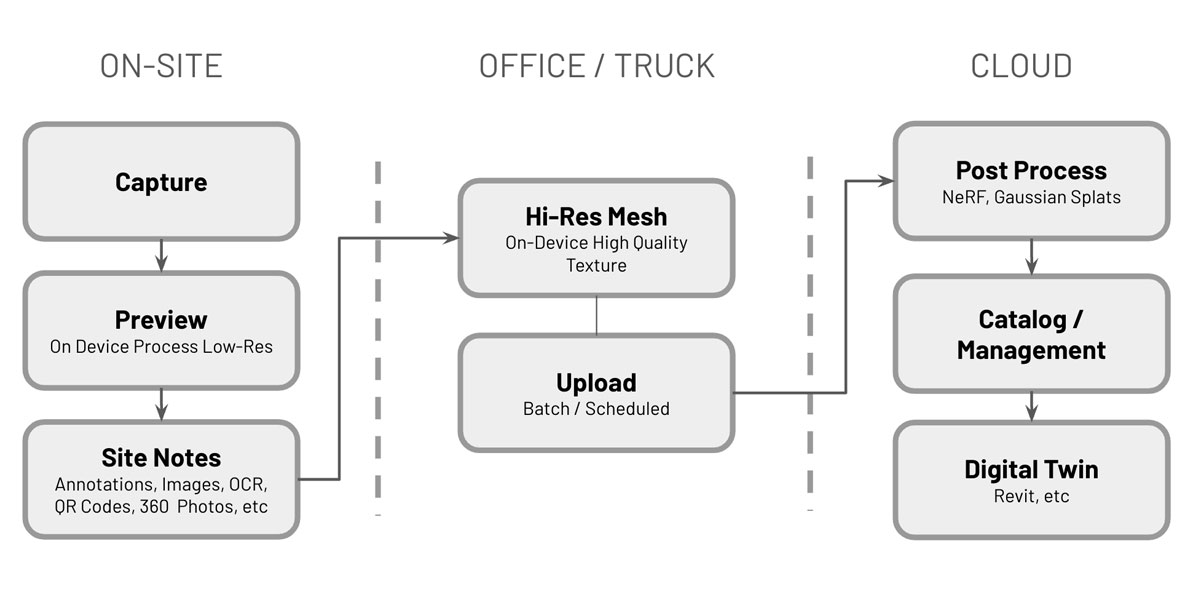

Laan Labs worked within the telco's business process to develop a workflow for capture, on-device mesh processing, and uploading datasets to the cloud for additional rendering outputs and ongoing data management. The workflow diagram gives a general overview of the process.

The general approach is for the iOS device to complete a capture and create a "Ground Truth" dataset. This dataset is stored in the cloud so it can be reused to reprocess 3D representations as the technologies improve. The dataset contains:

- Photoset - RGB Images with Pose Data

- Depth Data, LiDAR Confidence

- Meta Info - GPS, Date, User, etc.

- Annotations - Key Images, Site Notes, Text, Bar/QR Codes, etc.
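The Ground Truth dataset can be pictured as a simple record type. The sketch below is a hypothetical Python schema, not the actual 3D Scanner App file format: the class and field names (`Frame`, `Annotation`, `CaptureMeta`, `GroundTruthCapture`, `has_depth`, `to_json`) are illustrative assumptions, and bulky data such as images and depth maps is referenced by file path.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional, Tuple

# Hypothetical schema for a "Ground Truth" capture; all names are illustrative.


@dataclass
class Frame:
    image_path: str                         # RGB image
    pose: List[List[float]]                 # 4x4 camera-to-world transform
    depth_path: Optional[str] = None        # LiDAR depth map (absent on RGB-only devices)
    confidence_path: Optional[str] = None   # LiDAR confidence map


@dataclass
class Annotation:
    point: Tuple[float, float, float]       # 3D anchor point in world coordinates
    image_path: str                         # close-up key image
    note: str = ""                          # site notes / text
    codes: List[str] = field(default_factory=list)  # decoded bar/QR payloads


@dataclass
class CaptureMeta:
    latitude: float
    longitude: float
    captured_at: str                        # ISO 8601 timestamp
    user: str


@dataclass
class GroundTruthCapture:
    meta: CaptureMeta
    frames: List[Frame] = field(default_factory=list)
    annotations: List[Annotation] = field(default_factory=list)

    def has_depth(self) -> bool:
        """True only if there is at least one frame and every frame has LiDAR depth."""
        return bool(self.frames) and all(f.depth_path for f in self.frames)

    def to_json(self) -> str:
        """Serialize the whole record (nested dataclasses included) to JSON."""
        return json.dumps(asdict(self))
```

Keeping the raw frames, poses, and depth (rather than only a derived mesh) is what allows the same capture to be reprocessed later into newer outputs.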

Evaluation

To evaluate the various outputs, each one was generated from the same capture dataset and compared against the others, so that each output could be used for the asset-management task it is best suited to.

| Types | Metrics |
|-----------|-------------|
| • Point Cloud<br>• Mesh (On-Device, Photogrammetry Post-Process)<br>• 360 Photo / Virtual Tour<br>• Semantic Geometry (e.g., RoomPlan)<br>• NeRF<br>• Gaussian Splatting<br>• Digital Twin - Synthetic | • Processing Time / Cost<br>• Dimensionality (i.e., can you measure)<br>• Visual Acuity<br>• Loss Metrics (Holdout Images) |
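The table does not specify which loss metric was computed on holdout images; peak signal-to-noise ratio (PSNR) is one common choice for comparing a rendered view against a photo withheld from training. A minimal sketch, assuming images are NumPy arrays scaled to [0, 1] (the function names are illustrative):

```python
import numpy as np


def psnr(rendered: np.ndarray, holdout: np.ndarray, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio (dB) between a rendered view and a held-out photo."""
    if rendered.shape != holdout.shape:
        raise ValueError("rendered and holdout images must have the same shape")
    diff = rendered.astype(np.float64) - holdout.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


def mean_holdout_psnr(pairs) -> float:
    """Average PSNR over (rendered, holdout) image pairs excluded from training."""
    return float(np.mean([psnr(r, h) for r, h in pairs]))
```

Because every output comes from the same capture, the same holdout frames can score, for example, a NeRF and a Gaussian Splat side by side; higher PSNR means closer agreement with the real photo.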

Implementation of Outputs

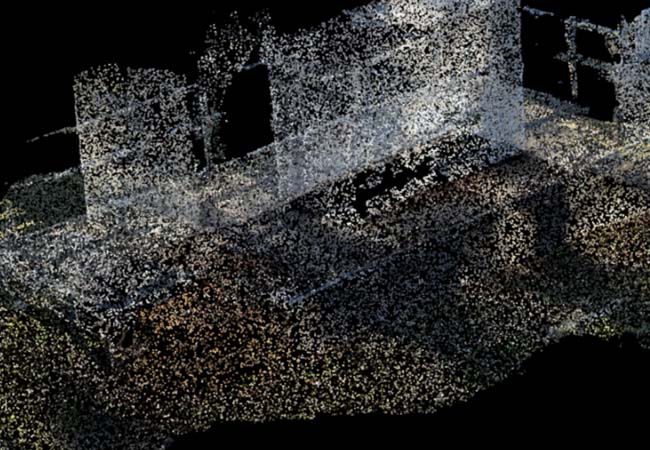

Point Cloud

A point cloud render of 3D points is a format many industry professionals are familiar with, and its RGB information provides some visual insight into the captured assets. This representation is often preferred for its "raw" data aesthetic: no post-processing or view synthesis has distorted any of the collected data. It is well suited to managing the dimensional characteristics of the assets, and the images collected during capture can be referenced for additional information.
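Since the capture stores RGB frames, LiDAR depth, and camera poses, a colored point cloud can be produced by back-projecting each depth pixel through a pinhole camera model and transforming it into world coordinates. The sketch below is a hypothetical NumPy illustration, not 3D Scanner App's implementation. It assumes an OpenCV-style camera convention (ARKit poses use a different axis convention and would need a flip), known intrinsics `fx, fy, cx, cy`, and an RGB image already resized to the depth map's resolution; `depth_to_points` and `distance` are illustrative names.

```python
import numpy as np


def depth_to_points(depth, rgb, fx, fy, cx, cy, cam_to_world,
                    confidence=None, min_confidence=2):
    """Back-project a depth map into a colored world-space point cloud.

    Assumes an OpenCV-style pinhole camera (x right, y down, z forward) and an
    optional per-pixel confidence map (ARKit uses 0=low, 1=medium, 2=high).
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))   # pixel column / row indices
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    pts_cam = np.stack([x, y, z, np.ones_like(z)], axis=-1).reshape(-1, 4)

    keep = z.reshape(-1) > 0                           # drop pixels with no depth
    if confidence is not None:
        keep = keep & (confidence.reshape(-1) >= min_confidence)

    pts_world = (cam_to_world @ pts_cam[keep].T).T[:, :3]
    colors = rgb.reshape(-1, 3)[keep]
    return pts_world, colors


def distance(p, q):
    """Straight-line distance between two points, in the depth map's units."""
    return float(np.linalg.norm(np.asarray(q, float) - np.asarray(p, float)))
```

Filtering on LiDAR confidence trades point density for reliability; keeping only high-confidence points is a conservative default when the cloud will be used for measurement.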

LiDAR Mesh

A processed mesh from a capture is a good output for asset dimensionality (i.e., measuring) along with general shape and structure. However, meshing often loses fine details and does not handle reflective surfaces well. Additionally, synthesizing and blending surface textures can introduce artifacts that distort the texture imagery, making fine details and text unreadable.
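Basic dimensions can be read directly off a triangle mesh. This minimal NumPy sketch assumes the mesh is already loaded as a vertex array and a triangle index array (for example, from an OBJ export); the function names are hypothetical. Results are in the mesh's own units, typically meters for LiDAR captures.

```python
import numpy as np


def mesh_surface_area(vertices: np.ndarray, faces: np.ndarray) -> float:
    """Total area of a triangle mesh (vertices: N x 3 floats, faces: M x 3 indices)."""
    v0, v1, v2 = (vertices[faces[:, i]] for i in range(3))
    # Each triangle's area is half the length of the cross product of two edges.
    return float(0.5 * np.linalg.norm(np.cross(v1 - v0, v2 - v0), axis=1).sum())


def bounding_box_extents(vertices: np.ndarray) -> np.ndarray:
    """Axis-aligned width / height / depth of the mesh in its own units."""
    return vertices.max(axis=0) - vertices.min(axis=0)
```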

Photogrammetry

Photogrammetry creates a mesh from RGB images alone, without the aid of any depth data. Because many mobile devices do not have LiDAR or similar depth sensors, this is a good device-agnostic fallback. As with drone capture, markers need to be used to get accurate scale. This processing is typically done in the cloud, and on-device LiDAR captures can also be post-processed in the cloud, which often improves texture resolution.
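RGB-only photogrammetry recovers shape only up to an unknown scale factor, which is why markers are needed: once two marker features a known distance apart are located in the reconstruction, their measured separation fixes the scale. This is a minimal sketch assuming the marker points have already been detected and triangulated; a full pipeline would also solve for rotation and translation (for example, a similarity transform over several control points). `scale_from_markers` and `apply_scale` are hypothetical names.

```python
import numpy as np


def scale_from_markers(marker_pairs, known_length_m):
    """Estimate a metric scale factor for an up-to-scale reconstruction.

    marker_pairs: list of (point_a, point_b) reconstruction coordinates of two
    marker features whose real separation is known_length_m meters.
    The median keeps a single badly detected marker from skewing the result.
    """
    if not marker_pairs:
        raise ValueError("at least one marker pair is required")
    ratios = []
    for a, b in marker_pairs:
        measured = np.linalg.norm(np.asarray(b, float) - np.asarray(a, float))
        if measured == 0:
            raise ValueError("marker points must be distinct")
        ratios.append(known_length_m / measured)
    return float(np.median(ratios))


def apply_scale(points, scale):
    """Convert reconstruction coordinates to meters."""
    return np.asarray(points, float) * scale
```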

360 Photo / Virtual Tour / Annotated Images

When the highest visual fidelity is required, 360 Photos and Annotated Images are used. 360-degree photos are created by asking the user to rotate through a full 360° arc while standing at a single point during the capture process; these images are often used in Virtual Tours. When close-up details are required, the user can create a 3D annotation image by tapping on a target object during a scan. This is typically the best method for capturing clear text for later OCR or Bar/QR Code detection.
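360 photos are commonly stored in the equirectangular projection, where the horizontal axis spans 360° of longitude and the vertical axis 180° of latitude; the document does not state the format used here, so treat this as an assumption. Under that assumption, a viewing direction maps to a panorama pixel as sketched below (the axis convention and the name `direction_to_equirect` are illustrative):

```python
import math


def direction_to_equirect(x, y, z, width, height):
    """Map a 3D viewing direction to (u, v) pixel coordinates in an equirectangular panorama.

    Assumed convention: +z is the panorama's center (forward), +x is right, +y is up;
    u grows to the right and v grows downward from the top edge.
    """
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0:
        raise ValueError("direction must be non-zero")
    lon = math.atan2(x, z)            # -pi..pi, 0 = forward
    lat = math.asin(y / norm)         # -pi/2..pi/2, positive = up
    u = (lon / (2 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v
```

A virtual tour viewer could use this mapping to place a hotspot, such as a 3D annotation, at the right spot in the panorama once the annotation's position has been expressed relative to the panorama's capture point.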

Semantic Geometry

Semantic Geometry extracts dimensioned geometry and identifies objects during capture, producing a 3D CAD file rather than a mesh or point cloud. While it was not implemented for this asset, the technology is improving quickly, as demonstrated by Apple's RoomPlan. It is currently optimized for interior captures, but support for exteriors and other content types is advancing rapidly.
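Because semantic geometry yields labeled, dimensioned elements instead of raw surfaces, quantities can be computed directly without measuring a mesh. The sketch below is a hypothetical Python model, loosely inspired by the categorized, dimensioned surfaces that RoomPlan reports; it is not RoomPlan's Swift API, and `SemanticElement`, `total_area`, and `net_wall_area` are illustrative names.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class SemanticElement:
    category: str                            # e.g. "wall", "door", "window"
    dimensions: Tuple[float, float, float]   # width, height, depth in meters


def total_area(elements: Iterable[SemanticElement], category: str) -> float:
    """Sum of width x height over all elements of one category."""
    return sum(e.dimensions[0] * e.dimensions[1]
               for e in elements if e.category == category)


def net_wall_area(elements) -> float:
    """Wall area minus door and window openings (assumes openings lie within walls)."""
    elements = list(elements)  # allow a single-pass iterator to be reused below
    return (total_area(elements, "wall")
            - total_area(elements, "door")
            - total_area(elements, "window"))
```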

NeRF (Neural Radiance Fields)

NeRF, or Neural Radiance Fields, refers to a novel type of neural network-based model for synthesizing views of 3D scenes. This approach is valued for its ability to create highly detailed, photorealistic renderings of complex scenes. However, it has poor dimensional accuracy (i.e., it is not well suited to measuring, though research is making good progress here), and it currently carries a high cost in cloud processing time and expense. These costs are falling rapidly with workflows such as Instant NGP and should soon be on par with photogrammetry.
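At NeRF's core is volume rendering: the network predicts a density sigma_i and a color c_i at sample points along each camera ray, and the pixel color is their weighted sum C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i, where delta_i is the spacing between samples and T_i = prod_{j<i} exp(-sigma_j * delta_j) is the transmittance. The sketch below implements only this compositing step in NumPy, with the network outputs assumed to be given; it is not a training pipeline, and `render_ray` is an illustrative name.

```python
import numpy as np


def render_ray(sigmas, colors, t_vals):
    """Composite NeRF samples along one ray (sampled at increasing distances t_vals)."""
    sigmas = np.asarray(sigmas, float)
    colors = np.asarray(colors, float)
    t_vals = np.asarray(t_vals, float)
    # Spacing between samples; the last sample is treated as extending very far.
    deltas = np.concatenate([np.diff(t_vals), [1e10]])
    alphas = 1.0 - np.exp(-sigmas * deltas)            # opacity of each segment
    # T_i: fraction of light that reaches sample i without being absorbed earlier.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    depth = float((weights * t_vals).sum())            # expected termination distance
    return rgb, depth, weights
```

The depth returned here is an expectation over a soft density rather than a hit on an explicit surface, which is one way to see why measuring directly from a NeRF is difficult.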

Gaussian Splatting or Gaussian Splats

Gaussian splatting is like taking a bunch of dots and blending them together with a soft brush so the picture looks smooth rather than choppy. It is a rendering technique used in 3D graphics to fill in the gaps between points and make scenes look more natural. It is much more cost-effective and quicker to produce than a NeRF, but it cannot currently be used for measurement: the scene is stored as many soft, overlapping 3D Gaussians that are projected ("splatted") onto the 2D image plane at render time, so there is no well-defined surface to measure against. This could change, as there is promising research on aligning meshes and splats.

In short, Gaussian Splatting is like using a digital airbrush to blend scattered dots into a smooth picture, making 3D graphics look less blocky. NeRFs, on the other hand, are like teaching a computer to recreate a photorealistic 3D scene from ordinary photos, capturing fine details and lighting effects.
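The "splatting" step can be sketched as front-to-back alpha blending: each Gaussian, once projected to the screen, contributes alpha = opacity * exp(-0.5 * d^T Sigma^-1 d) at a pixel offset d from its projected center, and depth-sorted contributions are blended until the pixel is effectively opaque. This NumPy sketch assumes the Gaussians have already been projected to 2D (the projection and the tile-based GPU rasterization used in practice are omitted); `Splat2D`, `composite_pixel`, and the threshold values are illustrative choices, not a specific library's API.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Splat2D:
    mean: np.ndarray        # projected center in pixels, shape (2,)
    cov: np.ndarray         # projected 2x2 covariance in pixels^2
    color: np.ndarray       # RGB, shape (3,)
    opacity: float          # learned opacity in [0, 1]
    depth: float            # camera-space depth used for sorting


def composite_pixel(pixel, splats, min_alpha=1 / 255, min_transmittance=1e-4):
    """Front-to-back alpha blending of already-projected Gaussians at one pixel."""
    color = np.zeros(3)
    transmittance = 1.0
    for s in sorted(splats, key=lambda s: s.depth):    # nearest first
        d = np.asarray(pixel, float) - s.mean
        # Gaussian falloff: exp(-0.5 * d^T cov^-1 d)
        power = -0.5 * (d @ np.linalg.solve(s.cov, d))
        alpha = min(0.99, s.opacity * np.exp(power))
        if alpha < min_alpha:
            continue                                   # negligible contribution
        color += transmittance * alpha * s.color
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:
            break                                      # pixel is effectively opaque
    return color, transmittance
```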

Digital Twin - Synthetic

A 3D CAD, or synthetic, Digital Twin is the cleanest visual representation and works with CAD software such as Revit. Although it does not reflect the asset's actual condition, many engineers prefer to work with it because of its interoperability. This output is typically not generated automatically; it usually involves a 3D engineer taking a point cloud or mesh and recreating the asset in a CAD program such as Revit, SketchUp, or similar. The drawing below is not of this asset, but it is worth mentioning alongside the other outputs because this output type is used in this telco's workflow.

Conclusion

Laan Labs implemented a successful solution by developing a highly customized workflow with a licensed version of 3D Scanner App as the base toolset. The system works well alongside the client's other collection methods, including drones and high-precision LiDAR scanners. If you have any questions, don't hesitate to get in touch at labs@laan.com.

Technologies Utilized

Computer Vision, Neural Networks, Cloud Services, NeRF, Gaussian Splatting